Introduction

The operation of sorting a dataset is one of the most fundamental of all operations studied in computer science. Literally trillions of sort operations are performed each day worldwide, more if one counts operations where a relatively small set of elements are merged into a larger set.

Many sorting algorithms are in use, including special routines for datasets of a certain size, and other routines optimized for specific hardware platforms and types of data.

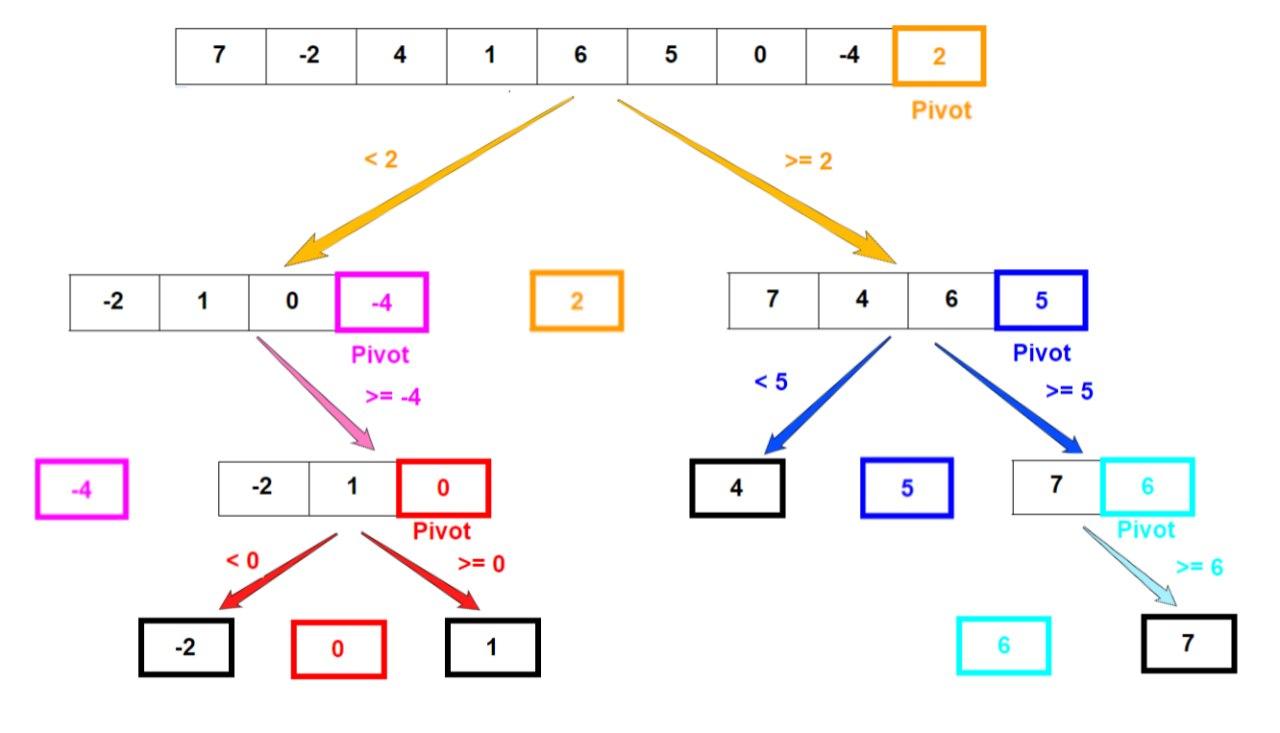

One relatively simple algorithm, which is actually quite efficient, is the “quicksort” algorithm. It may be simply stated as follows: Given a set of elements (integers, real numbers or alphabetical data, for sake of illustration), select some element as the “pivot.” It typically does not matter much which one is selected — some implementations select an element at random. Then partition the set, moving all elements that compare less than or equal to the pivot to the left, and all those that compare greater than the pivot to the right. Now apply this same scheme to each of the two subsets, continuing until all sets have only one element, so that the set is completely ordered. See the illustration of the quicksort algorithm to the right.

DeepMind’s recent successes

Before continuing, it is worth reviewing some of the remarkable successes achieved by the DeepMind organization, a research subsidiary of Alphabet, the parent company of Google, headquartered in London, UK.

Go playing board

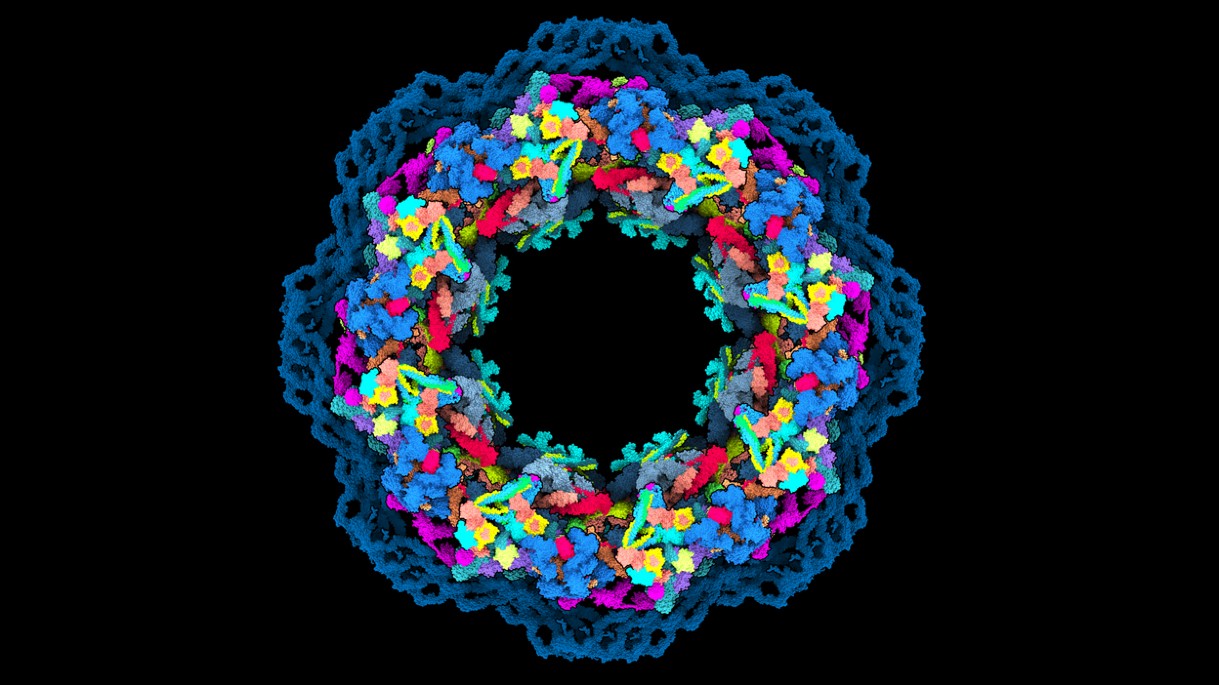

Model of human nuclear pore complex, built using AlphaFold2; credit: Agnieszka Obarska-Kosinska, Nature

Given the daunting challenge and importance of the protein folding problem, in 1994 a community of researchers in the field organized a biennial competition known as Critical Assessment of Protein Structure Prediction (CASP) [CASP]. In 2018, a program called AlphaFold, developed by DeepMind, won the competition. For the 2020 CASP competition, the DeepMind team developed a new program, known as AlphaFold 2 [AlphaFold 2], which achieved a 92% average score, far above the 62% achieved by the second-best program in the competition. “It’s a game changer,” exulted German biologist Andrei Lupas. “This will change medicine. It will change research. It will change bioengineering. It will change everything.” [Scientific American].

DeepMind’s AlphaTensor program for matrix multiplication

Building on these earlier successes, in 2022 researchers at DeepMind turned their tools to the analysis of matrix multiplication algorithms. They formulated the problem as a game, called TensorGame, where at each step the player selects how to combine different entries of the matrices to produce a matrix product. The scheme assigns a score for each selection, based on the number of operations required to reach a correct result.

To find optimal solutions to this problem, the researchers applied a “deep reinforcement learning” algorithm. The resulting program, called AlphaTensor, is a specialized neural network system employing a similar underlying design as the above-mentioned Go-playing and protein folding projects. The scheme employs a single agent to decompose matrix multiplication tensors of different sizes, which permits “learned” matrix multiplication techniques to be shared across different “players.” Full details of the scheme are described in a October 2022 Nature article by the DeepMind researchers.

The AlphaTensor program quickly discovered many of the existing matrix multiplication algorithm, including Strassen’s original algorithm and numerous others. But it also discovered several new algorithms not previously known in the literature. For example, for the product of two $4n \times 4n$ matrices, the previously most efficient algorithm was simply to apply Strassen’s algorithm recursively twice. This approach yields the result in $49$ block multiplications of size $n \times n$. AlphaTensor found a scheme that produces the result in $47$ block multiplications (although this result is valid only for matrices with binary elements). The DeepMind paper presented this scheme in full — it required a full page of small type. Similarly, for the product of two $5n \times 5n$ matrices, AlphaTensor found a scheme that produces the result in $96$ block multiplications of size $n \times n$, compared with $98$ in the literature (with the same restriction as mentioned above for $4n \times 4n$ matrices). Several other new results are listed in their paper, including three cases where their algorithms are applicable for standard real or complex matrices as well as for binary matrices.

The DeepMind researchers note that their results are focused on finding algorithms that minimize the total number of element-wise arithmetic operations in a matrix multiplication task. But the same AlphaTensor technology could also be used to optimize for other criteria, such as numerical stability or energy usage. And more generally, their overall methodology could be applied to a broad range of other mathematical problems as well. For additional details, see this earlier Math Scholar article.

DeepMind’s sorting achievement

DeepMind’s latest achievement is even more remarkable: efficient algorithms for sorting and hashing that are in some cases even more efficient than the best human efforts. In particular, the DeepMind researchers applied their general strategies, used for example in AlphaZero, to sorting, leading to a system they have dubbed AlphaDev.

The DeepMind researchers first applied AlphaDev to very small datasets — only say five elements. The program combined both deliberation and “intuition” to select its moves, as in playing Go or some other board game. At each decision point, the program may select one of four types of actions: comparing values, moving values, or branching to a different part of the program. After each step, it evaluates its performance and receives a “reward” based on how many items in the dataset were sorted correctly. The program continues until either the dataset is completely sorted or the program exceeds some preset length limit.

Once the program mastered very small sets, the same approach was unleashed for larger sets.

The bottom line is that AlphaDev’s best algorithms achieved up to a whopping 71% savings in run time on certain problems, compared with the best human efforts. The researchers noted, however, that for large datasets the savings were more modest, typically only 1-2%.

The researchers were very pleasantly surprised at the outcome: Daniel Mankowitz of DeepMind, who led the research team, said, “We were a bit shocked. … We didn’t believe it at first.” (see Nature article). Emma Bruskill of Stanford University (who was not part of the effort) added, “This is an exciting result.”

The authors mentioned that their algorithms have been integrated into the LLVM standard C++ sort library, and thus are already being used worldwide. For additional details, see the Nature synopsis, or the complete Nature technical article.

Where will this lead?

As we noted in an earlier Math Scholar article, schemes based on machine learning methods are doing much more than finding new mathematical or computer science results. They are also driving cars, writing code, investing money, creating new digital art, and even writing essays.

One of the most startling recent advances is a language model program called “Generative Pre-Trained Transformer” (GPT-3 is currently in use; the latest version is GPT-4), developed by OpenAI, an organization established by some prominent Silicon Valley technologists. Given a prompt, this program can generate surprisingly realistic text on a wide variety of topics. For example, when given the prompt “Write an essay discussing the role of metafiction in the work of Italo Calvino,” GPT-3 produced a five-paragraph essay that started [NY Times]:

Italian author Italo Calvino is considered a master of metafiction, a genre of writing in which the author breaks the fourth wall to discuss the act of writing itself. For Calvino, metafiction is a way of exploring the nature of reality and the ways in which stories can shape our perceptions of the world. His novels often incorporate playful, labyrinthine structures that play with the boundaries between reality and fiction. In “If on a winter’s night a traveler,” for example, the reader is constantly interrupted by meta-level discussions of the act of reading and the nature of storytelling.

Needless to say, language models such as GPT-3 and GPT-4 have their detractors. They tend to be quite good with some prompts, but produce rather strange responses to others. In a previous Math Scholar article, we asked ChatGPT, which is based on GPT-3, to prove four well-known mathematical theorems:

- Most angles cannot be trisected with ruler and compass.

- $\pi$ is irrational.

- $\pi$ is transcendental.

- Every algebraic equation with integer coefficients has a root in the complex numbers.

While the responses were interesting, in each case they fell far short of a complete, rigorous proof. It is clear that for mathematical work, these systems still need improvement. Research mathematicians are in no danger of being unemployed.

In any event, these developments are raising some interesting questions as to how close we are to real artificial general intelligence [Economist]. As Australian cognitive scientist David Chalmers penned in April 2022, shortly after OpenAI released the earlier CPT-3 software [NY Times],

What fascinates me about GPT-3 is that it suggests a potential mindless path to artificial general intelligence. … It is just analyzing statistics of language. But to do this really well, some capacities of general intelligence are needed, and GPT-3 develops glimmers of them.

A 2011 Time article featured an interview with futurist Ray Kurzweil, who has predicted an era (he estimated by 2045), a “singularity,” when machine intelligence will meet, then transcend human intelligence [Time]. Such future intelligent systems will then design even more powerful technology, resulting in a dizzying advance that we can only dimly foresee at the present time [Kurzweil book].

Futurists such as Kurzweil certainly have their skeptics and detractors. But even setting aside technical questions, there are solid reasons to be concerned about the potential societal, legal, financial and ethical challenges of machine intelligence, as exhibited by the current backlash against science, technology and “elites.” As Kevin Roose writes in the New York Times, “We’re in a golden age of progress in artificial intelligence. It’s time to start taking its potential and risks seriously.”

Along this line, in March 2023 more than 1000 tech leaders and researchers signed an open letter urging a moratorium on the development of state-of-the-art AI systems, citing “profound risks to society and humanity.” Others have even claimed that the singularity is already here, or in other words that the genie is already out of the bottle.

However these disputes are settled, it is clear that in our headlong rush to explore technologies such as machine learning, artificial intelligence and robotics, we must find a way to humanely deal with those whose lives and livelihoods will be affected by these technologies. The very fabric of society may hang in the balance.