Introduction

Most of us learn the basic scheme for matrix multiplication in high school. What could be more simple and straightforward? Thus it may come as a surprise to some the basic scheme is not the most efficient.

German mathematician Volker Strassen was the first to show this, in 1969, by exhibiting a scheme that yields practical speedups even for moderately sized matrices. In the years since Strassen’s discovery, numerous other researchers have found even better schemes for certain specific matrix size classes. For a good overview of these methods, together with some numerical analysis and other considerations, see Higham2022.

The latest development here is that researchers at DeepMind, a research subsidiary of Alphabet (Google’s parent), have devised a machine learning-based program that has not only reproduced many of the specific results in the literature, but has also discovered a few schemes, for certain specific size classes, that are even more efficient than the best known methods. In this article, we present an introduction to these fast matrix multiplication methods, and then describe the latest results.

Strassen’s algorithm for matrix multiplication

Strassen’s algorithm is as follows. Consider matrices $A, B$ and $C$, which, for simplicity in the presentation here, may each be assumed to be of size $2n \times 2n$ for some integer $n$ (although the algorithm is also valid for more general size combinations). By decomposing each of these matrices into half-sized (i.e., $n \times n$) submatrices $A_{ij}, B_{ij}$ and $C_{ij}$, one can write

$$A =

\begin{bmatrix}

A_{11} & A_{12} \\

A_{21} & A_{22}

\end{bmatrix}, \quad

B =

\begin{bmatrix}

B_{11} & B_{12} \\

B_{21} & B_{22}

\end{bmatrix}, \quad

C =

\begin{bmatrix}

C_{11} & C_{12} \\

C_{21} & C_{22}

\end{bmatrix},$$

where

$$\begin{bmatrix}

C_{11} & C_{12} \\

C_{21} & C_{22}

\end{bmatrix}

=

\begin{bmatrix}

A_{11} B_{11} + A_{12} B_{21} &

A_{11} B_{12} + A_{12} B_{22} \\

A_{21} B_{11} + A_{22} B_{21} &

A_{21} B_{12} + A_{22} B_{22}

\end{bmatrix}.$$

With this decomposition, eight multiplications of $n \times n$ submatrices are required to produce the results. The total number of element-wise multiplications is the same as with the standard matrix multiplication scheme, so at first it appears that nothing has been gained. However, Strassen observed that if one computes seven intermediate $n \times n$ matrices

$$\begin{align}

P_1 &= (A_{11} + A_{22}) (B_{11} + B_{22}) \\

P_2 &= (A_{21} + A_{22}) B_{11} \\

P_3 &= A_{11} (B_{12} – B_{22}) \\

P_4 &= A_{22} (B_{21} – B_{11}) \\

P_5 &= (A_{11} + A_{12}) B_{22} \\

P_6 &= (A_{21} – A_{11}) (B_{11} + B_{12}) \\

P_7 &= (A_{12} – A_{22}) (B_{21} + B_{22}), \\

\end{align}$$

then one can write:

$$\begin{bmatrix}

C_{11} & C_{12} \\

C_{21} & C_{22}

\end{bmatrix}

=

\begin{bmatrix}

P_1 + P_4 – P_5 + P_7 &

P_3 + P_5 \\

P_2 + P_4 &

P_1 – P_2 + P_3 + P_6

\end{bmatrix}.$$

The important fact to note here is that computing each of the $P_k$ matrices requires only a single $n \times n$ matrix multiplication, so that the final result is obtained in only seven $n \times n$ matrix multiplications instead of eight with the conventional scheme.

This savings comes at a cost of 18 matrix additions/subtractions of size $n \times n$, compared with just four with the conventional scheme. This is certainly not an improvement in the simplest case $n = 2$, where the submatrices are scalars, but say when $n = 2^m$ for some moderately large $m$, a recursive application of Strassen’s scheme yields the final result in fewer total element-wise operations. In the present author’s experience (see Bailey1991), practical speedups can be achieved on present-day computer processors even for $n$ as small as say 128 or 256. Asymptotically, Strassen’s scheme has a complexity of $O(n^{\log_2(7)}) \approx O(n^{2.8074})$, compared with $O(n^3)$ for the conventional scheme.

Recent DeepMind achievements

As mentioned above, researchers at DeepMind, a research subsidiary of Alphabet (Google’s parent), have devised a machine learning-based search program that has not only reproduced many of the specific results in the literature, but has also discovered a few schemes, for certain specific size classes, that are even more efficient than the best known methods. First, we will briefly review some of DeepMind’s earlier achievements, upon which their new matrix work is based.

The ancient Chinese game of Go is notoriously complicated, with strategies that can only be described in vague, subjective terms. Many observers did not expect Go-playing computer programs to beat the best human players for many years, if ever. Then in May 2017, a computer program named “AlphaGo,” developed by DeepMind, defeated Ke Jie, a 19-year-old Chinese Go master thought to be the world’s best human Go player [NY Times]. Later that same year, a new DeepMind program named “AlphaGo Zero” defeated the earlier Alpha Go program 100 games to zero. After 40 days of training by playing games with itself, AlphaGo Zero was as far ahead of Ke Jie as Ke Jie is ahead of a good amateur player [Quanta Magazine].

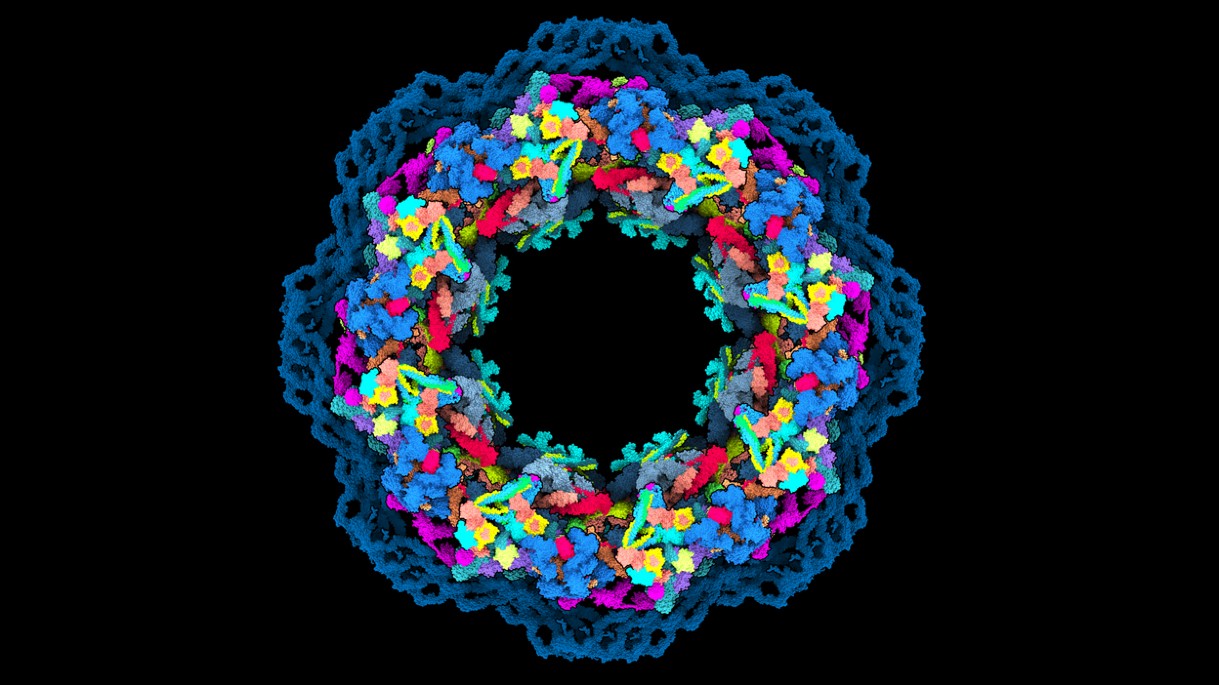

Model of human nuclear pore complex, built using AlphaFold2; credit: Agnieszka Obarska-Kosinska, Nature

Given the daunting challenge and importance of the protein folding problem, in 1994 a community of researchers in the field organized a biennial competition known as Critical Assessment of Protein Structure Prediction (CASP) [CASP]. In 2018, a program called AlphaFold, developed by DeepMind, won the competition. For the 2020 CASP competition, the DeepMind team developed a new program, known as AlphaFold 2 [AlphaFold 2], which achieved a 92% average score, far above the 62% achieved by the second-best program in the competition. “It’s a game changer,” exulted German biologist Andrei Lupas. “This will change medicine. It will change research. It will change bioengineering. It will change everything.” [Scientific American].

DeepMind’s AlphaTensor program for matrix multiplication

Building on these earlier successes, in 2022 researchers at DeepMind turned their tools to the analysis of matrix multiplication algorithms. They formulated the problem as a game, called TensorGame, where at each step the player selects how to combine different entries of the matrices to produce a matrix product. The scheme assigns a score for each selection, based on the number of operations required to reach a correct result.

To find optimal solutions to this problem, the researchers applied a “deep reinforcement learning” algorithm. The resulting program, called AlphaTensor, is a specialized neural network system employing a similar underlying design as the above-mentioned Go-playing and protein folding projects. The scheme employs a single agent to decompose matrix multiplication tensors of different sizes, which permits “learned” matrix multiplication techniques to be shared across different “players.” Full details of the scheme are described in a October 2022 Nature article by the DeepMind researchers.

The AlphaTensor program quickly discovered many of the existing matrix multiplication algorithm, including Strassen’s original algorithm and numerous others. But it also discovered several new algorithms not previously known in the literature. For example, for the product of two $4n \times 4n$ matrices, the previously most efficient algorithm was simply to apply Strassen’s algorithm recursively twice. This approach yields the result in $49$ block multiplications of size $n \times n$. AlphaTensor found a scheme that produces the result in $47$ block multiplications (although this result is valid only for matrices with binary elements). The DeepMind paper presented this scheme in full — it required a full page of small type. Similarly, for the product of two $5n \times 5n$ matrices, AlphaTensor found a scheme that produces the result in $96$ block multiplications of size $n \times n$, compared with $98$ in the literature (with the same restriction as mentioned above for $4n \times 4n$ matrices). Several other new results are listed in their paper, including three cases where their algorithms are applicable for standard real or complex matrices as well as for binary matrices.

The DeepMind researchers note that their results are focused on finding algorithms that minimize the total number of element-wise arithmetic operations in a matrix multiplication task. But the same AlphaTensor technology could also be used to optimize for other criteria, such as numerical stability or energy usage. And more generally, their overall methodology could be applied to a broad range of other mathematical problems as well.

In yet another interesting development, the publication of the DeepMind paper on 5 October 2022 has spurred some other researchers to take a fresh look at the problem. Jakob Moosbauer and Manuel Kauers at Johannes Kepler University Linz in Austria have now reported employing a different approach that improves on DeepMind’s method for $5 \times 5$ matrices — they reduced DeepMind’s $96$ block multiplications to $95$. Moosbauer and Kauers promise to release full details soon.

Where will this lead?

As we noted in an earlier Math Scholar article, schemes based on machine learning methods are doing much more than finding new mathematical or computer science results. They are also driving cars, writing code, investing money, creating new digital art, and even writing essays.

One of the most startling recent advances is a language model program called “Generative Pre-Trained Transformer 3” (GPT-3), developed by OpenAI, an organization established in 2015 by some prominent Silicon Valley technologists. Given a prompt, this program can generate surprisingly realistic text on a wide variety of topics. For example, when given the prompt “Write an essay discussing the role of metafiction in the work of Italo Calvino,” GPT-3 produced a five-paragraph essay that started [NY Times]:

Italian author Italo Calvino is considered a master of metafiction, a genre of writing in which the author breaks the fourth wall to discuss the act of writing itself. For Calvino, metafiction is a way of exploring the nature of reality and the ways in which stories can shape our perceptions of the world. His novels often incorporate playful, labyrinthine structures that play with the boundaries between reality and fiction. In “If on a winter’s night a traveler,” for example, the reader is constantly interrupted by meta-level discussions of the act of reading and the nature of storytelling.

Needless to say, language models such as GPT-3 have their detractors. They tend to be quite good with some prompts, but produce rather strange responses to others, particularly if the prompt has some inconsistent or nonsensical information.

In any event, these developments are raising some interesting questions as to how close we are to real artificial general intelligence [Economist]. As Australian cognitive scientist David Chalmers penned in April 2022, shortly after OpenAI released the CPT-3 software [NY Times],

What fascinates me about GPT-3 is that it suggests a potential mindless path to artificial general intelligence. … It is just analyzing statistics of language. But to do this really well, some capacities of general intelligence are needed, and GPT-3 develops glimmers of them.

A 2011 Time article featured an interview with futurist Ray Kurzweil, who has predicted an era (he estimated by 2045) when machine intelligence will meet, then transcend human intelligence [Time]. Such future intelligent systems will then design even more powerful technology, resulting in a dizzying advance that we can only dimly foresee at the present time [Book].

Futurists such as Kurzweil certainly have their skeptics and detractors. But even setting aside technical questions, there are solid reasons to be concerned about the potential societal, legal, financial and ethical challenges of machine intelligence, as exhibited by the current backlash against science, technology and “elites.” As Kevin Roose writes in the New York Times, “We’re in a golden age of progress in artificial intelligence. It’s time to start taking its potential and risks seriously.”

However these disputes are settled, it is clear that in our headlong rush to explore technologies such as machine learning, artificial intelligence and robotics, we must find a way to humanely deal with those whose lives and livelihoods will be affected by these technologies. The very fabric of society may hang in the balance.