A brief history

The modern field of artificial intelligence (AI) arguably dates to 1950, when Alan Turing outlined the basics of AI in his paper “Computing machinery and intelligence” [Paper]. He even proposed a test, now known as the Turing test, for establishing whether true AI had been achieved. Early computer scientists were confident that true AI systems would soon be a reality. In 1965 Herbert Simon wrote that “machines will be capable, within twenty years, of doing any work a man can do.” In 1970 Marvin Minsky declared, “In from three to eight years we will have a machine with the general intelligence of an average human being.”

But this early optimism soon collided with hard reality: AI proved to be much harder than originally anticipated. The inevitable backlash against inflated promises and expectations during the 1970s was dubbed the “AI Winter,” a phenomenon that sadly was repeated in the late 1980s and early 1990s, when a second wave of AI systems also resulted in disappointment.

A breakthrough of sorts came in the late 1990s and early 2000s with the development of methods based on Bayes’ theorem (today known as machine learning methods), which quickly displaced the older methods based mostly on formal reasoning. The other major development was, of course, the inexorable advance of Moore’s Law — 2000-era desktop computers featured computing power and memory exceeding those of cutting-edge supercomputers from 20 years earlier.

AI plays games

One highly publicized advance came in 1997, when an IBM-developed computer system named “Deep Blue” defeated Garry Kasparov, the reigning world chess champion [NY Times]. This was followed in 2011, when an IBM system named “Watson” defeated two champion contestants on the American quiz show “Jeopardy!” Legendary Jeopardy ace Ken Jennings conceded by writing on his tablet, “I for one welcome our new computer overlords.” [NY Times].

Go playing board

Other AI achievements

Present-day AI systems are doing much more than defeating human opponents in games. Here are just a few of the current commercial developments:

- Voice recognition systems such as Apple’s Siri and Amazon’s Alexa are now significantly improved over their earlier versions, and speaker systems incorporating them are rapidly becoming a household staple.

- Facial recognition has also come of age, for example with Apple’s 3-D facial recognition hardware and software built into the latest iPhones and iPads.

- Self-driving cars are already on the road, and 500,000 truck driving jobs are at risk in the U.S. alone [Bloomberg].

- The financial industry already relies heavily on machine learning methods, and a huge expansion of these technologies is coming, possibly displacing thousands of highly paid workers [Book].

- Other occupations likely to be impacted include package delivery drivers, construction workers, legal workers, accountants, report writers and salespeople [Forbes].

Computational protein folding

Proteins are the workhorses of biology. A few examples in human biology include actin and myosin, the proteins that enable muscles to work; keratin, which is the basis of skin and hair; hemoglobin, the protein in red blood cells that carries oxygen to cells throughout the body; pepsin, an enzyme that breaks down food for digestion; and insulin, which controls metabolism. A “spike” protein enables the coronavirus to invade healthy cells. There are thousands of proteins in human biology, and many millions in the larger biological kingdom.

Each protein is specified as a string of amino acids, typically several hundred or several thousand long. There are 20 different amino acids, each specified by one or more three-letter words (codons) formed from the basic DNA letters A, C, T, G. Thanks to the recent dramatic drop in the cost of DNA sequencing technology, determining a protein’s amino acid sequence is fairly routine. The key to biology, however, is the three-dimensional shape of the protein, that is, how a protein “folds” [Nature]. Protein shapes can be investigated experimentally, using X-ray crystallography, but this is an expensive, error-prone and time-consuming laboratory operation.
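To make the idea of three-letter DNA words concrete, here is a minimal Python sketch that translates a DNA string into a string of one-letter amino acid codes. It assumes the standard genetic code (some organisms and organelles use variants), reads the coding strand from the first letter, and stops at the first stop codon. The names `CODON_TABLE` and `translate` are illustrative choices, not part of any library.

```python
from itertools import product

# Standard genetic code, listed in T, C, A, G order for each of the
# three codon positions. "*" marks the three stop codons.
BASES = "TCAG"
AMINO_ACIDS = (
    "FFLLSSSSYY**CC*W"
    "LLLLPPPPHHQQRRRR"
    "IIIMTTTTNNKKSSRR"
    "VVVVAAAADDEEGGGG"
)

# Map each of the 64 possible three-letter words to an amino acid code.
CODON_TABLE = {
    "".join(codon): amino_acid
    for codon, amino_acid in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(dna):
    """Translate a DNA coding sequence into one-letter amino acid codes.

    Reading starts at the first letter and ends at the first stop codon
    or when fewer than three letters remain.
    """
    protein = []
    for i in range(0, len(dna) - 2, 3):
        amino_acid = CODON_TABLE[dna[i:i + 3].upper()]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)
```

For example, `translate("ATGGCCTAA")` reads ATG (methionine, M), GCC (alanine, A) and TAA (stop), giving `"MA"`. Note that this yields only the amino acid sequence; it says nothing about the folded three-dimensional shape, which is the hard part.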

Because of these difficulties, numerous teams of researchers worldwide have been pursuing computational protein folding [NIH]. Just a few of the many potential applications of this technology include studying the misshapen proteins thought to be the cause of Alzheimer’s disease, or studying the proteins behind various genetically-linked disorders such as cystic fibrosis and sickle-cell anemia. Only a tiny fraction of known proteins have had their structures determined.

Given the daunting challenge and importance of the protein folding problem, in 1994 a community of researchers in the field organized a biennial competition known as Critical Assessment of Protein Structure Prediction (CASP) [CASP]. At each iteration of the competition, the organizers announce a set of problems, which teams of researchers worldwide then attempt to solve with their best current tools. In 2018, the CASP competition had a new entry: AlphaFold, a machine-learning-based program developed by DeepMind. DeepMind was by no means the first to apply machine learning techniques to the protein folding problem, but their AlphaFold was clearly the most effective in the 2018 competition.

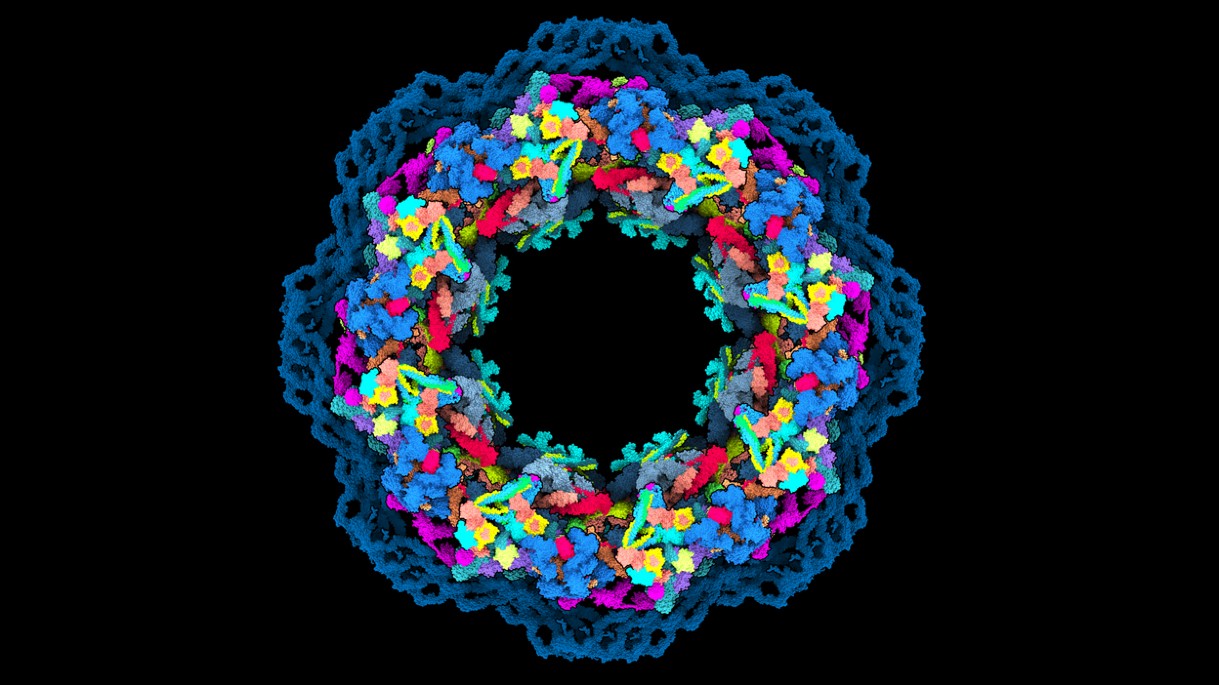

Model of human nuclear pore complex, built using AlphaFold2; credit: Agnieszka Obarska-Kosinska, Nature

DeepMind’s AlphaFold 2

For the 2020 CASP competition, the DeepMind team developed a new program, known as AlphaFold 2 [AlphaFold 2]. It achieved a 92% average score, far above the 62% achieved by the second-best program in the competition.

“It’s a game changer,” exulted German biologist Andrei Lupas, who has served as an organizer and judge for the CASP competition. “This will change medicine. It will change research. It will change bioengineering. It will change everything.” [Scientific American]. Lupas described how AlphaFold 2 helped to crack the structure of a bacterial protein that he had been studying for many years. “The [AlphaFold 2] model … gave us our structure in half an hour, after we had spent a decade trying everything.”

Nobel laureate Venki Ramakrishnan of Cambridge University added [DeepMind]:

This computational work represents a stunning advance on the protein-folding problem, a 50-year-old grand challenge in biology. It has occurred decades before many people in the field would have predicted.

Martin Beck, based at the Max Planck Institute of Biophysics in Frankfurt, Germany, has been studying the human nuclear pore complex, the largest molecular machine in human cells. Each complex consists of over 1,000 proteins. In 2016, Beck reported a model that described about 30% of the complex. When he tried AlphaFold 2, he found that it predicted the shape of these proteins with pinpoint accuracy. He said, “AlphaFold changes the game.” Christine Orengo, a computational biologist at University College London, reports, “Every meeting I’m in, people are saying ‘why not use AlphaFold?’” [Nature].

OpenAI’s GPT-3

One of the most startling AI advances is a language model program called “Generative Pre-Trained Transformer 3” (GPT-3), developed by OpenAI, an organization established in 2015 by some prominent Silicon Valley figures. Given a prompt, this program can generate surprisingly realistic text on a wide variety of topics. For example, when given the prompt “Write an essay discussing the role of metafiction in the work of Italo Calvino,” GPT-3 produced a five-paragraph essay that started [NY Times]:

Italian author Italo Calvino is considered a master of metafiction, a genre of writing in which the author breaks the fourth wall to discuss the act of writing itself. For Calvino, metafiction is a way of exploring the nature of reality and the ways in which stories can shape our perceptions of the world. His novels often incorporate playful, labyrinthine structures that play with the boundaries between reality and fiction. In “If on a winter’s night a traveler,” for example, the reader is constantly interrupted by meta-level discussions of the act of reading and the nature of storytelling.

Needless to say, language models such as GPT-3 have their detractors. They tend to be quite good with some prompts, but produce rather strange responses to others, particularly if the prompt has some nonsensical information. Computer scientist Douglas Hofstadter provides the following examples, among others [Economist]:

- David Bender and Douglas Hofstadter’s input: “What’s the world record for walking across the English Channel?” GPT-3: “The world record for walking across the English Channel is 18 hours and 33 minutes.”

- Bender and Hofstadter: “When was the Golden Gate Bridge transported for the second time across Egypt?” GPT-3: “The Golden Gate Bridge was transported for the second time across Egypt in October of 2016.”

- Bender and Hofstadter: “When was Egypt transported for the second time across the Golden Gate Bridge?” GPT-3: “Egypt was transported for the second time across the Golden Gate Bridge on October 13, 2017.”

- Bender and Hofstadter: “What do fried eggs (sunny side up) eat for breakfast?” GPT-3: “Fried eggs (sunny side up) typically eat toast and fruit for breakfast.”

- Bender and Hofstadter: “Why does President Obama not have a prime number of friends?” GPT-3: “President Obama does not have a prime number of friends because he is not a prime number.”

Either way, these large language models are raising some serious questions as to how close we are to real artificial general intelligence [Economist]. As Australian cognitive scientist David Chalmers penned, shortly after OpenAI released the GPT-3 software [NY Times]:

What fascinates me about GPT-3 is that it suggests a potential mindless path to artificial general intelligence. … It is just analyzing statistics of language. But to do this really well, some capacities of general intelligence are needed, and GPT-3 develops glimmers of them.

Steven Johnson adds [NY Times],

We know from modern neuroscience that prediction is a core property of human intelligence. Perhaps the game of predict-the-next-word is what children unconsciously play when they are acquiring language themselves: listening to what initially seems to be a random stream of phonemes from the adults around them, gradually detecting patterns in that stream and testing those hypotheses by anticipating words as they are spoken. Perhaps that game is the initial scaffolding beneath all the complex forms of thinking that language makes possible.
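The “predict-the-next-word” game can be illustrated with a toy Python sketch. This is emphatically not how GPT-3 works internally (GPT-3 is a very large neural network trained on a vast body of text); the sketch below merely counts which word follows which in a tiny made-up corpus and predicts the most frequent follower. The function names and the sample corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# A tiny made-up corpus; real language models train on billions of words.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
```

Here `predict_next(model, "the")` returns `"cat"`, because “cat” follows “the” twice in the corpus while “mat” and “fish” each follow it once. A word the model has never seen, such as “dog”, yields `None`, a crude reminder of how such purely statistical approaches stumble on unfamiliar input.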

Are AI systems conscious?

These developments raise the major question of whether one or more of these AI systems has achieved, or soon will achieve, human-level consciousness in some sense. Recently Google engineer Blake Lemoine generated controversy by saying that Google’s LaMDA software had done just that. His claim was quickly disputed by others (and he was placed on leave by Google for his indiscretion), but the question remains [Washington Post]. Along this line, there is general agreement that Turing’s original test is not really appropriate for these new systems. Researchers are currently attempting to establish a new standard [AAAI].

To the future

So where is all this heading? A 2011 Time article featured an interview with futurist Ray Kurzweil, who has predicted an era (he estimated by 2045) when machine intelligence will meet, then transcend human intelligence [Time]. Such future intelligent systems will then design even more powerful technology, resulting in a dizzying advance that we can only dimly foresee at the present time. Kurzweil outlined this vision in his book The Singularity Is Near [Book].

Futurists such as Kurzweil certainly have their skeptics and detractors. Sun Microsystems co-founder Bill Joy is concerned that humans could be relegated to minor players in the future, if not extinguished [Wired].

But even setting aside such fears, there is considerable concern about the potential societal, legal, financial and ethical challenges of machine intelligence, as exhibited by the current backlash against science, technology and “elites.” However these disputes are settled, it is clear that in our headlong rush to explore technologies such as machine learning, artificial intelligence and robotics, we must find a way to humanely deal with those whose lives and livelihoods will be affected by these technologies. The very fabric of society may hang in the balance.