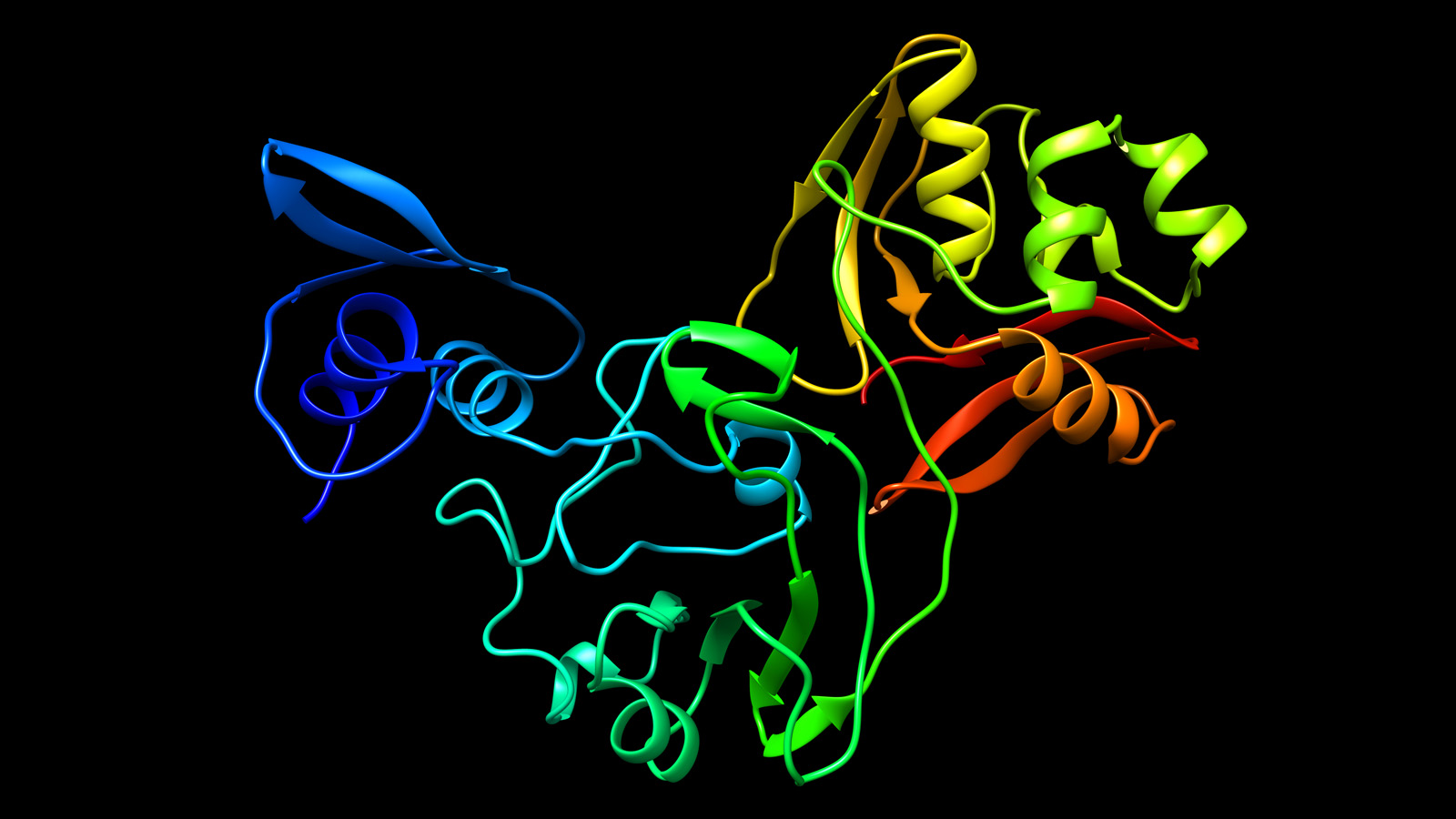

Structure of the Nsp15 hexamer, a protein of SARS-CoV-2, the virus that causes Covid-19 (courtesy Argonne National Laboratory)

Protein folding

Proteins are the workhorses of biology. A few examples in human biology include actin and myosin, the proteins that enable muscles to contract; keratin, the basis of skin and hair; hemoglobin, the protein in red blood cells that carries oxygen throughout the body; pepsin, an enzyme that breaks down food during digestion; and insulin, a hormone that regulates metabolism. A protein known as “spike” is the key that lets the coronavirus invade healthy cells. And for every protein in human biology, there are many thousands of other proteins in the rest of the living world.

Each protein is specified as a string of amino acids, typically a few hundred to a few thousand long. There are 20 different amino acids, each encoded by one or more three-letter words (codons) over the DNA alphabet A, C, G, T. Thanks to the dramatic drop in the cost of DNA sequencing, determining a protein's amino acid sequence is now fairly routine.
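
To make the "three-letter word" idea concrete, here is a minimal Python sketch that translates a DNA coding sequence into amino acids, one codon at a time. It lists only a handful of entries from the standard genetic code (a real translator needs all 64 codons), and the names `CODON_TABLE` and `translate` are our own, for illustration. Since four letters taken three at a time give 4^3 = 64 codons for only 20 amino acids, most amino acids have several synonymous codons; GCT and GCC, for example, both encode alanine.

```python
# Partial standard genetic code: DNA codon -> one-letter amino acid code.
# Only a few of the 64 codons are included, for illustration.
CODON_TABLE = {
    "ATG": "M",              # methionine (also the usual start codon)
    "GCT": "A", "GCC": "A",  # alanine: two synonymous codons
    "TGG": "W",              # tryptophan
    "AAA": "K", "AAG": "K",  # lysine
    "GAA": "E",              # glutamate
    "TAA": "*", "TAG": "*", "TGA": "*",  # stop codons
}


def translate(dna: str) -> str:
    """Translate a DNA coding sequence into a string of amino acid codes.

    Reads non-overlapping three-letter codons from the start and stops at
    the first stop codon. Raises KeyError for codons not in the partial table.
    """
    protein = []
    for i in range(0, len(dna) - len(dna) % 3, 3):
        amino_acid = CODON_TABLE[dna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


print(4 ** 3)                                  # 64 possible codons
print(translate("ATGGCTGCCAAAGAATGGTAA"))      # MAAKEW
```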

The key to biology, however, is the three-dimensional shape the protein takes once it is created by cell machinery, that is, how the protein “folds.” Hydrophobic amino acids tend to congregate in the interior of the structure, away from the surrounding water. Amino acids carrying a negative charge attract those carrying a positive charge. Hydrogen bonds along the amino acid chain can lead to the formation of spirals (helices) or sheets.
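
A classic toy model that captures the first of these effects is the hydrophobic-polar (HP) lattice model: each residue is either hydrophobic (H) or polar (P), the chain is laid out on a square grid, and every pair of H residues that touch on the grid without being neighbors along the chain lowers the energy by one unit. This is a textbook simplification, not how real folding programs score structures; the function `hp_energy` below is a minimal sketch with names of our own choosing.

```python
from itertools import combinations
from typing import List, Tuple


def hp_energy(sequence: str, coords: List[Tuple[int, int]]) -> int:
    """Energy of a 2D lattice conformation in the HP model.

    sequence: string of 'H' (hydrophobic) and 'P' (polar) residues.
    coords:   one (x, y) grid point per residue; consecutive residues must be
              grid neighbors, and no two residues may share a point.
    Each non-consecutive H-H pair at grid distance 1 contributes -1.
    """
    if len(sequence) != len(coords):
        raise ValueError("need one coordinate per residue")
    if len(set(coords)) != len(coords):
        raise ValueError("chain overlaps itself")
    for (x1, y1), (x2, y2) in zip(coords, coords[1:]):
        if abs(x1 - x2) + abs(y1 - y2) != 1:
            raise ValueError("consecutive residues must be adjacent")

    energy = 0
    for i, j in combinations(range(len(sequence)), 2):
        if j - i < 2:
            continue  # chain neighbors are always adjacent; not a contact
        (x1, y1), (x2, y2) = coords[i], coords[j]
        if sequence[i] == sequence[j] == "H" and abs(x1 - x2) + abs(y1 - y2) == 1:
            energy -= 1
    return energy


# Folding HPPH into a square brings its two hydrophobic ends into contact.
print(hp_energy("HPPH", [(0, 0), (1, 0), (1, 1), (0, 1)]))  # -1
print(hp_energy("HPPH", [(0, 0), (1, 0), (2, 0), (3, 0)]))  # 0
```

Lower energy means a more favorable fold, so the compact shape, with its hydrophobic residues tucked together, is preferred over the extended one.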

Protein shapes can be determined experimentally, for example by x-ray crystallography, but this is an expensive, error-prone and time-consuming laboratory process, certainly not one that can be economically performed on each of the millions of proteins whose structures are not yet known.

Computational protein folding

Because of these difficulties, numerous teams of researchers worldwide have been pursuing computational protein folding. Just a few of the many potential applications of this technology include studying the misshapen proteins thought to be the cause of Alzheimer’s disease, or studying the proteins behind various genetically linked disorders such as cystic fibrosis and sickle-cell anemia. Only a tiny fraction of known proteins (roughly 170,000 of over 180,000,000, or less than 0.1%) have had their structures determined, so there is much to be done. Obviously, the stakes are very high.

A protein folding calculation is typically posed as a large global energy minimization problem. Because exhaustively trying every possible shape is computationally infeasible, the problem is usually decomposed into a set of smaller local minimization problems, whose results are then assembled to construct the larger structure. Some approaches are completely computational, working from first principles; others rely in part on tables of previously computed basic protein shapes.
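
Why exhaustive search is hopeless can be seen from a back-of-the-envelope count in the spirit of Levinthal's paradox. Assume, purely for illustration, that each residue's backbone can adopt only three distinct conformations and that a computer could evaluate a trillion (10^12) conformations per second; both numbers are assumptions, not measurements. A 100-residue chain then has 3^100 candidate shapes:

```python
# Illustrative assumptions, not measured values.
CONFORMATIONS_PER_RESIDUE = 3
RESIDUES = 100
EVALUATIONS_PER_SECOND = 10 ** 12
SECONDS_PER_YEAR = 365.25 * 24 * 3600

total = CONFORMATIONS_PER_RESIDUE ** RESIDUES  # exact integer count
years = total / EVALUATIONS_PER_SECOND / SECONDS_PER_YEAR

print(f"{total:.2e} conformations")            # 5.15e+47 conformations
print(f"{years:.1e} years to enumerate them")  # 1.6e+28 years to enumerate them
```

That is more than 10^18 times the roughly 14-billion-year age of the universe, which is why practical methods break the problem into smaller local searches instead.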

The underlying computational task in many protein folding calculations is molecular dynamics: simulating the motions of a protein's atoms under the forces between them. One of the many teams in the molecular dynamics field is led by David E. Shaw, founder of the D.E. Shaw quantitative hedge fund. His research team, which designed a special-purpose system (“Anton”) for molecular dynamics computation, has twice won the Gordon Bell Prize from the Association for Computing Machinery (2009 and 2014).
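
At its core, a molecular dynamics code repeatedly computes the force on every atom and advances positions and velocities by a small time step. The sketch below shows that loop using the widely used velocity Verlet integrator, for just two atoms on a line interacting through a Lennard-Jones potential in reduced units (energy scale, length scale and mass all set to 1). It is a toy under those assumptions, not Anton's software: real protein simulations involve many thousands of atoms, far richer force fields and specialized hardware.

```python
def lj_energy(r: float) -> float:
    """Lennard-Jones potential V(r) = 4 * ((1/r)**12 - (1/r)**6), reduced units."""
    sr6 = (1.0 / r) ** 6
    return 4.0 * (sr6 * sr6 - sr6)


def lj_force(r: float) -> float:
    """Force -dV/dr along the separation; positive pushes the atoms apart."""
    sr6 = (1.0 / r) ** 6
    return 24.0 * (2.0 * sr6 * sr6 - sr6) / r


def simulate(x1, x2, v1=0.0, v2=0.0, dt=0.001, steps=5000):
    """Velocity Verlet for two unit-mass atoms on a line, with x1 < x2."""
    f = lj_force(x2 - x1)
    a1, a2 = -f, f  # equal and opposite forces; mass is 1, so a = F
    for _ in range(steps):
        # 1. Advance positions using current velocities and accelerations.
        x1 += v1 * dt + 0.5 * a1 * dt * dt
        x2 += v2 * dt + 0.5 * a2 * dt * dt
        # 2. Recompute forces at the new positions.
        f = lj_force(x2 - x1)
        new_a1, new_a2 = -f, f
        # 3. Advance velocities using the average of old and new accelerations.
        v1 += 0.5 * (a1 + new_a1) * dt
        v2 += 0.5 * (a2 + new_a2) * dt
        a1, a2 = new_a1, new_a2
    return x1, x2, v1, v2


def total_energy(x1, x2, v1, v2):
    """Kinetic plus potential energy of the pair."""
    return 0.5 * (v1 * v1 + v2 * v2) + lj_energy(x2 - x1)


start = (0.0, 1.5, 0.0, 0.0)  # two atoms at rest, 1.5 length units apart
end = simulate(*start)
print(total_energy(*start), total_energy(*end))  # the two values agree closely
```

The atoms attract, oscillate about their equilibrium separation, and the total energy stays nearly constant; that good long-run energy behavior is a main reason velocity Verlet is a common choice of integrator.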

While steady progress on protein folding has been reported by numerous teams worldwide, the consensus in the field is that the best programs and hardware platforms are still not up to the task of reliably solving full-scale, real-world protein folding problems in reasonable run times.

The CASP protein folding competition

Given the daunting challenge and importance of the protein folding problem, in 1994 researchers in the field organized a biennial competition known as Critical Assessment of Protein Structure Prediction (CASP). In each round, the organizers release a set of target proteins whose structures have been (or are about to be) determined experimentally but not yet made public, and teams worldwide apply their best current tools to predict those structures. When CASP started in 1994, the average score was only 4%; by 2016 it had risen to 36%, promising but still far from acceptable.
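
The headline metric in CASP is the Global Distance Test, GDT_TS, on a 0 to 100 scale: after the predicted structure is superimposed on the experimental one, it averages the percentages of residues lying within 1, 2, 4 and 8 Å of their true positions. The sketch below computes that average from per-residue distances. It assumes the superposition has already been done, whereas the official score searches over many superpositions to find the best one for each cutoff, so treat `gdt_ts` (our own name) as a simplified illustration.

```python
from typing import Sequence


def gdt_ts(distances: Sequence[float]) -> float:
    """Simplified GDT_TS (0-100) from per-residue deviations, in Angstroms,
    between a predicted structure and an experimental structure that have
    already been superimposed."""
    if not distances:
        raise ValueError("need at least one residue")
    cutoffs = (1.0, 2.0, 4.0, 8.0)
    n = len(distances)
    # Percentage of residues within each distance cutoff.
    percentages = [100.0 * sum(d <= c for d in distances) / n for c in cutoffs]
    return sum(percentages) / len(cutoffs)


print(gdt_ts([0.5, 1.5, 3.0, 10.0]))  # 56.25
```

Here the four cutoffs capture 25%, 50%, 75% and 75% of the residues, averaging 56.25. A perfect prediction, with every residue within 1 Å, scores 100.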

In 2018, the CASP competition had a new entry: AlphaFold, a machine-learning-based program developed by DeepMind, a division of Alphabet (Google’s parent company). AlphaFold achieved a score of roughly 56%. DeepMind’s program was by no means the first to apply machine learning techniques to the protein folding problem, but it was clearly the most effective in the 2018 competition. We summarized this achievement and its potential applications in a previous Math Scholar blog post.

The DeepMind team certainly has credentials for state-of-the-art machine learning work. In March 2016, a DeepMind computer program named “AlphaGo” defeated Lee Se-dol, a South Korean Go master, 4-1 in a five-game match, an achievement that many observers had not expected for decades, if ever. Then in October 2017, DeepMind researchers unveiled a new program, AlphaGo Zero, built from scratch: it was given only the rules of Go and a simple reward function, and then learned by playing games against itself. After just three days of training, AlphaGo Zero defeated the earlier AlphaGo program 100 games to zero, and after 40 days of training, its performance was as far ahead of champion human players as champion human players are ahead of amateurs. For additional background, see this Economist article, this Scientific American article and this Nature article.

DeepMind’s AlphaFold 2

For the 2020 CASP competition, the DeepMind team decided to scrap its original AlphaFold program, and instead developed a new program, known as AlphaFold 2, from scratch. In the just-completed 2020 CASP competition (conducted virtually due to the Covid-19 pandemic), AlphaFold 2 achieved a 92.4% average score, far above the 62% achieved by the second-best program in the competition.

“It’s a game changer,” exulted German biologist Andrei Lupas, who has served as an organizer and judge for the CASP competition. “This will change medicine. It will change research. It will change bioengineering. It will change everything.”

Lupas mentioned how AlphaFold 2 helped to crack the structure of a bacterial protein that Lupas himself has been studying for many years. “The [AlphaFold 2] model … gave us our structure in half an hour, after we had spent a decade trying everything.”

Nobel laureate Venki Ramakrishnan of Cambridge University added:

This computational work represents a stunning advance on the protein-folding problem, a 50-year-old grand challenge in biology. It has occurred decades before many people in the field would have predicted.

DeepMind treated a folded protein as a “spatial graph,” in which the residues (the individual amino acids in the chain) are the nodes and edges connect residues in close proximity, and then applied an attention-based neural network that attempts to interpret the structure of this graph. They trained the system on roughly 170,000 publicly available protein structures from the Protein Data Bank. Some additional details and analyses are given in this DeepMind report, and DeepMind promises a more detailed technical report in the coming months.
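
To make the "spatial graph" idea concrete, the sketch below builds such a graph from residue coordinates: each residue becomes a node, and an edge joins any two residues whose representative atoms (assumed here to be the alpha carbons) lie within a distance cutoff. The 8 Å cutoff and the name `contact_graph` are our own illustrative choices; this shows only the data structure, not how AlphaFold 2 builds or uses its graph.

```python
import math
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float, float]


def contact_graph(coords: List[Point], cutoff: float = 8.0) -> Dict[int, Set[int]]:
    """Adjacency sets for a residue 'spatial graph'.

    coords: one 3D coordinate (in Angstroms) per residue.
    Residues i and j share an edge when their distance is <= cutoff.
    """
    graph: Dict[int, Set[int]] = {i: set() for i in range(len(coords))}
    for i in range(len(coords)):
        for j in range(i + 1, len(coords)):
            if math.dist(coords[i], coords[j]) <= cutoff:
                graph[i].add(j)
                graph[j].add(i)
    return graph


# Three residues close together (3.8 Angstroms is roughly the spacing of
# consecutive alpha carbons) and a fourth residue far away.
residues = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (3.8, 3.8, 0.0), (20.0, 0.0, 0.0)]
print(contact_graph(residues))  # {0: {1, 2}, 1: {0, 2}, 2: {0, 1}, 3: set()}
```

Note that the graph records spatial closeness, not position in the chain: residues far apart in the sequence become neighbors once the chain folds back on itself.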

For additional perspectives, see this Scientific American article, this Economist article and this Ars Technica article.

Conclusion

With AlphaFold 2, the DeepMind team has achieved a significant breakthrough in a computational application that, unlike playing Go or chess, is indisputably of great potential importance to human health and society. We can expect the DeepMind team to further improve their software, and their efforts and similar efforts by other research teams, coupled with increasingly powerful hardware targeted to machine learning applications, will presumably make it routine to determine large protein structures computationally.

Further, it is inevitable that the machine learning technology at the heart of these research projects will be effectively applied in other arenas of modern science and technology. Already, significant advances have been achieved in mathematics, physics and finance, to name but three.

So where is all this heading? A recent Time article featured an interview with futurist Ray Kurzweil, who has predicted an era, roughly in 2045, when machine intelligence will meet, then transcend, human intelligence. Such future intelligent systems will then design even more powerful technology, resulting in a dizzying advance that we can only dimly foresee at the present time. Kurzweil outlined this vision in his book The Singularity Is Near.

Futurists such as Kurzweil certainly have their skeptics and detractors. Sun Microsystems co-founder Bill Joy is concerned that humans could be relegated to minor players in the future, if not extinguished. There is already concern that AI systems, in many cases, make decisions that humans cannot readily understand or gain insight into.

But even setting aside such fears, there is considerable concern about the potential societal, legal, financial and ethical challenges of machine intelligence, as exhibited by the current backlash against science, technology and “elites.” However these disputes are settled, it is clear that in our headlong rush to explore technologies such as machine learning, artificial intelligence and robotics, we must find a way to deal humanely with those whose lives and livelihoods will be affected by these technologies. The very fabric of society may hang in the balance.