Google’s quantum computing achievement

For at least three decades, teams of researchers have been exploring quantum computers for real-world applications in scientific research, engineering and finance. Researchers have dreamed of the day when quantum computers would first achieve “supremacy” over classical computers, in the sense that a quantum computer solving a particular problem faster than any present-day or soon-to-be-produced classical computer system.

In a Nature article dated 23 October 2019, researchers at Google announced that they have achieved exactly this.

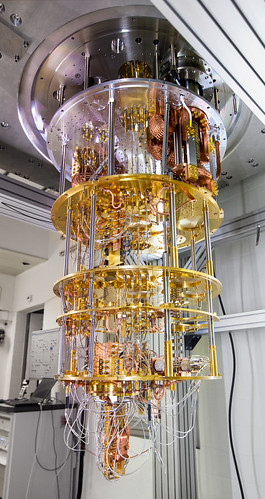

Google researchers employed a custom-designed quantum processor, named “Sycamore,” consisting of programmable quantum computing units, to create quantum states on 53 “qubits,” which, as they point out, corresponds to exploring a space of dimension 253, or approximately 1016. The task that the researchers chose was one that admittedly was specifically chosen to be well-suited for quantum computers: the team programmed the quantum computer to run a circuit that passes the 53 qubits through a series of random operations, in effect generating a string of pseudorandom zeroes and ones. The computer then calculated the probability distribution generated by these pseudorandom trials, by sampling the circuit task one million times and recording the results.

The Google researchers’ Sycamore quantum computer system completed the task in only 3 minutes 20 seconds. They claimed that the task would require 10,000 years, even on a present-day supercomputer with 1,000,000 processors.

For additional details, see the Google researchers’ Nature paper, this Nature summary, and this New Scientist report.

IBM: Not so fast!

The ink was barely dry on Google’s announcement when IBM released a technical paper arguing that Google’s comparison to a classical computer is flawed. The IBM researchers argue that the classical computation the Google researchers outlined (but did not actually perform) did not take full advantage of a typical present-day supercomputer’s storage system. When a storage system is strategically employed in the computation, IBM argues that the hypothetical run would complete in only 2.55 days on the “Summit” supercomputer at Oak Ridge National Laboratory, running at 87.4 Pflop/s (i.e., 87.4 quadrillion floating-point operations per second, or 8.74 x 1016 floating-point operations per second). IBM further argues that their computation could produce solutions with higher fidelity than the Sycamore quantum computer.

As Ciaran Gilligan-Lee of University College London observes,

Classical computers have such a large suite of things built into them that if you don’t utilise every single thing you leave yourself open for a tweaked classical algorithm to beat your quantum one.

At the least, Gilligan-Lee argues that claims of quantum supremacy “should be taken with a grain of salt.”

Another criticism of the Google team’s claim is that the particular benchmark problem they selected, namely to produce statistics from generating large numbers of pseudorandom numbers, is a highly artificial task, one that in no way reflects present-day real-world high-performance scientific computing. The pseudorandom number task is completely different from problems such as computationally simulating the earth’s climate for several decades into the future, to better understand different strategies for combatting global warming, computationally exploring the space of inorganic materials for improved solar cells or superconductors, or dynamically optimizing an investment portfolio.

One final criticism is that Google’s quantum processor is only one of many similar projects, some of which have already achieved significant success. The Canadian company D-Wave, for instance, has been producing quantum computers for several years, focusing on systems designed specifically for “quantum annealing,” namely a particular type of minimization or maximization calculation that nonetheless has broad applicability to real-world problems.

Historical perspective: Parallel versus vector supercomputers

In the 1970s and 1980s, most high-end supercomputers were based on a pipelined vector architecture, where a processor performed, say, 64 identical arithmetic or logical operations on 64 sets of arguments, in a zipper-like pipelined stream. But beginning in the early 1990s, systems with a highly parallel architecture, characterized by numerous independent processor-memory nodes connected in a network, became commercially available. The high-performance computing world raced to explore these new highly parallel systems for large-scale scientific and engineering computations.

Unfortunately, however, many researchers using these systems, in their zeal to be part of a new computing movement, began to publish performance levels and comparisons with prevailing vector computers that were not carefully done. Questionable performance reports were seen in papers from universities and government laboratories as well as private industry. The present author and some others in the high-performance scientific computing field grew concerned that, in many cases, exuberance was being transformed into exaggeration and even borderline fraud, hardly becoming of rigorous, sober scientific research.

Some of the questionable practices included (see also this paper):

- Performing calculations on a small parallel systems, but reporting results scaled to a full-sized parallel system merely by multiplication (i.e., claiming runs on a system with 4096 processors, but only performing runs on a system with 1024 processors, with performance results multiplied by four).

- Reporting performance in units of operations per second, but employing inefficient algorithms that result in artificially inflated performance levels.

- Comparing highly tuned parallel computer runs with untuned programs on a vector computer system.

- Not actually performing a claimed computation on the system being compared to, but instead employing a highly questionable “rule of thumb” conversion factor.

- Employing questionable performance plots, often with extrapolated data points not clearly acknowledged in the text of the paper.

- Omitting key details in the technical paper, such as whether the computation was performed using 64-bit floating-point arithmetic (approximately 16 decimal digit accuracy) or 32-bit floating-point arithmetic (approximately 7 decimal digit accuracy, which usually runs faster but produces much less accurate final results).

At first, the present author warned the community by means of a widely circulated humorous essay; when this stratagem failed, he published a more serious technical paper that directly quoted from a number of the questionable papers (and he endured considerable sniping from some in the community as a result).

Partly as a consequence of these exaggerated performance claims, many universities and laboratories became disappointed and disillusioned with the parallel computers that they had purchased, and the parallel computing field languished. Only about ten years later, after substantially improved highly parallel computers became available, and after significantly more realistic performance benchmarks and practices were adopted in the high-performance computing community, did highly parallel computing finally gain widespread acceptance.

For additional details, see this talk by the present author.

Towards more rigorous quantum computer performance reporting

Spanish-American philosopher George Santayana once quipped, “Those who cannot remember the past are condemned to repeat it.”

With the rise of quantum computers, as they challenge the long-running reign of highly parallel computers, we are at a juncture quite similar to the rise of parallel computer systems in the early 1990s, as they challenged the long-running reign of vector computers. In this era, as in the previous era, it is crucial that researchers, in their enthusiasm to explore the new architectures, not set aside basic principles of rigorous scientific methodology, objectivity and reproducibility.

To that end, here are some suggestions to avoid learning lessons of the past the “hard” way:

- The quantum computing field must establish clear standards for performance analyses.

- Editors of journals and referees of papers must be vigilant in enforcing these standards.

- Government funding agencies must support these standards efforts.

- Community-developed and community-endorsed benchmarks are a must.

- Benchmarks should include problems to measure specific features of a system, as well to assess performance on full-scale real-world problems typical of those that the community agrees are truly representative of computations that will actually be performed on such systems.

- Both leaders and bench-level researchers need to be frank about shortcomings of these new systems — such hard-hitting frankness is essential for the field to move forward.

The present author is as hopeful as anyone that these quantum computing systems will be successful. But let’s explore these systems with our eyes open!